Neste post irei falar um pouco sobre o método de compressão de dados do SQL Server e como podemos utilizar esse recurso para compactar todos os objetos de um database. A compressão de dados pode ser configurada para os seguintes objetos de banco de dados:

- Para uma tabela que é armazenada como um heap.

- Para uma tabela que é armazenada como um índice clustered.

- Para um índice nonclustered.

- Para uma view indexada.

- Para tabelas e índices particionados, a opção de compressão pode ser configurada para cada partição, e as várias partições de um objeto podem conter diferentes tem configurações de compressão.

Lembrando que a compressão não está disponível para objetos de sistema e a configuração de compressão não é aplicada automaticamente a índices nonclustered. Sendo assim, cada índice nonclustered deve ser configurado individual e manualmente.

ROW COMPRESSION LEVEL

This compression feature takes into account the type of variable data structures that define a column. Row Compression Level is a compression level that does not use any compression algorithm.

O principal objetivo da Row Compression Level é reduzir o armazenamento de dados do tipo fixos, ou seja, quando você está permitindo Row Level Compression você está apenas mudando o formato de armazenamento físico dos dados que estão associados a um tipo de dados.

Row Level Compression estende o formato de armazenamento vardecimal por armazenar dados de todos os tipos de comprimento fixo em um formato de armazenamento de comprimento variável. Este tipo de compressão irá remover quaisquer bytes extras no tipo de dados fixo. Não há absolutamente nenhuma mudança necessária no aplicativo.

Por exemplo, temos uma coluna CHAR (100) que está usando o Row Compression Level só usará a quantidade de armazenamento definida pelos dados. Como assim? Vamos armazenar a frase “SQL Server 2008″ na coluna, a frase contem apenas 15 caracteres e apenas esses 15 caracteres são armazenados ao contrario dos 100 que foram definidos pela coluna, portanto, você tem uma economia de 85% no espaço de armazenamento.

PAGE COMPRESSION LEVEL

Nas versões anteriores do SQL Server cada valor era armazenado na página, independentemente se o mesmo valor já havia aparecido na mesma coluna para algumas outras linhas dentro de uma página. No SQL Server 2008, o valor redundante ou duplicado será armazenado apenas uma vez dentro da página e o será referenciado em todas as outras ocorrências, dessa forma temos o Page Compression Level.

Basicamente, o Page Compression Level é um superconjunto de compressão ROW e leva em conta os dados redundantes em uma ou mais linhas em uma determinada página. Ele também usa a compactação de prefixo e dicionário.

O método Page Compression Level é mais vital, pois permite que os dados comuns sejam compartilhados entre as linhas de uma determinada página.

This type of compression uses the following techniques:

- ROW COMPRESSION: Already seen above.

- PREFIX COMPRESSION: For each column on a page duplicate prefixes are identified. These prefixes are stored in Information Compression (CI) headers, which reside after the page header. A reference number is assigned to these prefixes and this reference number is used wherever these prefixes are being used, decreasing the amount of bytes used.

- DICTIONARY COMPRESSION: Looks for duplicate values off the page and stores them in the CI. The main difference between Prefix Compression and Dictionary Compression is that Prefix is restricted to just one column whereas Dictionary is applicable for the complete page.

Once the Prefix Compression has completed, the Dictionary Compression is applied and searches for repeated values anywhere on the page and stores them in the CI area. Unlike Prefix Compression, Dictionary Compression is not constrained to a column and can replace repeated values, which occur anywhere on a page.

Estimando o ganho de espaço

No SQL Server 2008 existem duas maneiras de estimar a economia de espaço para armazenamento de tabelas e índices. O primeiro método é usar uma SP de sistema chamada sp_estimate_data_compression_savings e o segundo método é usar o Assistente de Compactação de Dados.

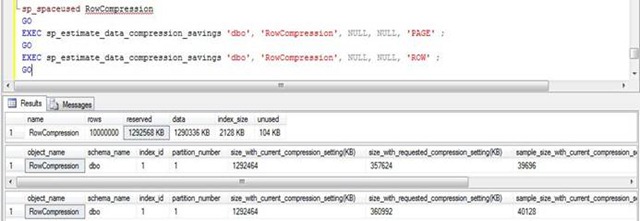

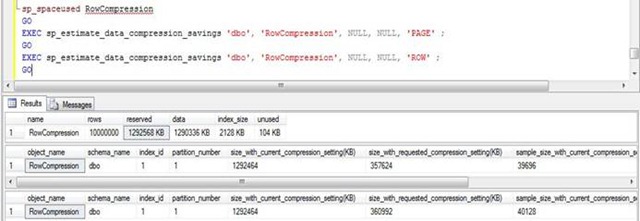

Primeiro vamos usar a sp_estimate_data_compression_savings onde:

1º parâmetro é o nome do schema;

2º parâmetro é o nome do objeto;

3º parâmetro é o ID do índice;

4º parâmetro é o ID da Partição

5º parâmetro é o tipo de compressão;

Notem as colunas size_with_current_compression_setting(KB) e size_with_requested_compression_setting(KB), essas colunas mostras o valor atual e o valor após a compressão. Dessa forma podemos saber quanto de espaço iremos ganhar com a aplicação da compressão.

Agora vamos utilizar o assistente.

Clique com o botão direito em cima da tabela escolha a opção Storege -> Manage Compression

Será aberta a tela do assistente de compressão

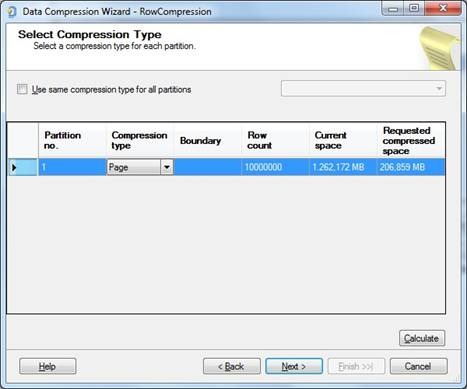

Na próxima tela do assiste, você pode testar o tipo de compressão e antes de aplicar você pode calcular o espaço para verificar qual obteve a maior taxa de compressão de dados.

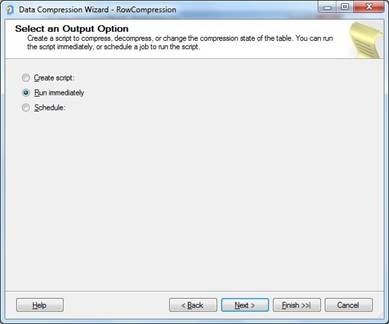

Seguindo com o assistente de compressão, escolhi a opção PAGE, que geralmente obtém o melhor resultado, e em seguida irá perguntar o que fazer: Apenas gerar o script, executar a compactação ou agendar a alteração para ativar a compactação.

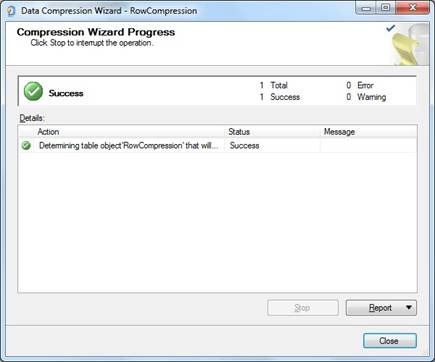

Por fim, será mostrado na tela uma mensagem de sucesso na compressão dos dados

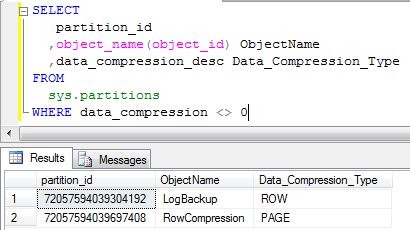

Você pode verificar todos os seus objetos e quais níveis de compressão eles estão clicando com o botão direito em cima do objeto, na guia Storage, a opção Compression informa o tipo de compressão ou utilizando o comando:

|

1 2 3 4 5 6 7 8 9 10 |

SELECT A.[partition_id], A.[object_id], object_name(A.[object_id]) AS [object_name], data_compression_desc FROM sys.partitions A join sys.objects B on A.[object_id] = B.[object_id] WHERE B.is_ms_shipped = 0 |

Aplicando compressão em um banco de dados inteiro

Nesse post já vimos o quão benéfica é a compressão de dados, principalmente para o storage. Pois bem, vamos colocar isso em prática. Criei uma procedure que permite compactar todos os objetos de um database, de forma fácil e rápida. O código da procedure está disponível aqui:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 182 |

CREATE PROCEDURE [dbo].[stpCompacta_Database] ( @Ds_Database SYSNAME, @Fl_Rodar_Shrink BIT = 1, @Fl_Parar_Se_Falhar BIT = 1, @Fl_Exibe_Comparacao_Tamanho BIT = 1, @Fl_Metodo_Compressao_Page BIT = 1 ) AS BEGIN SET NOCOUNT ON DECLARE @Ds_Query VARCHAR(MAX), @Ds_Comando_Compactacao VARCHAR(MAX), @Ds_Metodo_Compressao VARCHAR(20) = (CASE WHEN @Fl_Metodo_Compressao_Page = 1 THEN 'PAGE' ELSE 'ROW' END), @Nr_Metodo_Compressao VARCHAR(20) = (CASE WHEN @Fl_Metodo_Compressao_Page = 1 THEN 2 ELSE 1 END) IF (OBJECT_ID('tempdb..#Comandos_Compactacao') IS NOT NULL) DROP TABLE #Comandos_Compactacao CREATE TABLE #Comandos_Compactacao ( Id BIGINT IDENTITY(1, 1), Tabela SYSNAME, Indice SYSNAME NULL, Comando VARCHAR(MAX) ) IF (@Fl_Exibe_Comparacao_Tamanho = 1) BEGIN SET @Ds_Query = ' SELECT (SUM(a.total_pages) / 128) AS Vl_Tamanho_Tabelas_Antes_Compactacao FROM [' + @Ds_Database + '].sys.tables t WITH(NOLOCK) INNER JOIN [' + @Ds_Database + '].sys.indexes i WITH(NOLOCK) ON t.OBJECT_ID = i.object_id INNER JOIN [' + @Ds_Database + '].sys.partitions p WITH(NOLOCK) ON i.object_id = p.OBJECT_ID AND i.index_id = p.index_id INNER JOIN [' + @Ds_Database + '].sys.allocation_units a WITH(NOLOCK) ON p.partition_id = a.container_id WHERE i.OBJECT_ID > 255 ' EXEC(@Ds_Query) END SET @Ds_Query = 'INSERT INTO #Comandos_Compactacao( Tabela, Indice, Comando ) SELECT DISTINCT A.name AS Tabela, NULL AS Indice, ''ALTER TABLE ['' + ''' + @Ds_Database + ''' + ''].['' + C.name + ''].['' + A.name + ''] REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = ' + @Ds_Metodo_Compressao + ')'' AS Comando FROM [' + @Ds_Database + '].sys.tables A INNER JOIN [' + @Ds_Database + '].sys.partitions B ON A.object_id = B.object_id INNER JOIN [' + @Ds_Database + '].sys.schemas C ON A.schema_id = C.schema_id WHERE B.data_compression <> ' + @Nr_Metodo_Compressao + ' -- NONE AND B.index_id = 0 AND A.type = ''U'' UNION SELECT DISTINCT B.name AS Tabela, A.name AS Indice, ''ALTER INDEX ['' + A.name + ''] ON ['' + ''' + @Ds_Database + ''' + ''].['' + C.name + ''].['' + B.name + ''] REBUILD PARTITION = ALL WITH ( STATISTICS_NORECOMPUTE = OFF, ONLINE = OFF, SORT_IN_TEMPDB = OFF, DATA_COMPRESSION = ' + @Ds_Metodo_Compressao + ')'' FROM [' + @Ds_Database + '].sys.indexes A INNER JOIN [' + @Ds_Database + '].sys.tables B ON A.object_id = B.object_id INNER JOIN [' + @Ds_Database + '].sys.schemas C ON B.schema_id = C.schema_id INNER JOIN [' + @Ds_Database + '].sys.partitions D ON A.object_id = D.object_id AND A.index_id = D.index_id WHERE D.data_compression <> ' + @Nr_Metodo_Compressao + ' -- NONE AND D.index_id <> 0 AND B.type = ''U'' ORDER BY Tabela, Indice ' EXEC(@Ds_Query) DECLARE @Qt_Comandos INT = (SELECT COUNT(*) FROM #Comandos_Compactacao), @Contador INT = 1, @Ds_Mensagem VARCHAR(MAX), @Nr_Codigo_Erro INT = (CASE WHEN @Fl_Parar_Se_Falhar = 1 THEN 16 ELSE 10 END) WHILE(@Contador <= @Qt_Comandos) BEGIN SELECT @Ds_Comando_Compactacao = Comando FROM #Comandos_Compactacao WHERE Id = @Contador BEGIN TRY SET @Ds_Mensagem = 'Executando comando "' + @Ds_Comando_Compactacao + '"... Aguarde...' RAISERROR(@Ds_Mensagem, 10, 1) WITH NOWAIT EXEC(@Ds_Comando_Compactacao) END TRY BEGIN CATCH SELECT ERROR_NUMBER() AS ErrorNumber, ERROR_SEVERITY() AS ErrorSeverity, ERROR_STATE() AS ErrorState, ERROR_PROCEDURE() AS ErrorProcedure, ERROR_LINE() AS ErrorLine, ERROR_MESSAGE() AS ErrorMessage; SET @Ds_Mensagem = 'Falha ao executar o comando "' + @Ds_Comando_Compactacao + '"' RAISERROR(@Ds_Mensagem, @Nr_Codigo_Erro, 1) WITH NOWAIT RETURN END CATCH SET @Contador = @Contador + 1 END IF (@Fl_Exibe_Comparacao_Tamanho = 1) BEGIN SET @Ds_Query = ' SELECT (SUM(a.total_pages) / 128) AS Vl_Tamanho_Tabelas_Depois_Compactacao FROM [' + @Ds_Database + '].sys.tables t WITH(NOLOCK) INNER JOIN [' + @Ds_Database + '].sys.indexes i WITH(NOLOCK) ON t.OBJECT_ID = i.object_id INNER JOIN [' + @Ds_Database + '].sys.partitions p WITH(NOLOCK) ON i.object_id = p.OBJECT_ID AND i.index_id = p.index_id INNER JOIN [' + @Ds_Database + '].sys.allocation_units a WITH(NOLOCK) ON p.partition_id = a.container_id WHERE i.OBJECT_ID > 255 ' EXEC(@Ds_Query) END IF (@Fl_Rodar_Shrink = 1) BEGIN SET @Ds_Query = ' USE ' + @Ds_Database + ' DBCC SHRINKFILE (' + @Ds_Database + ', 1) WITH NO_INFOMSGS ' EXEC(@Ds_Query) END IF (@Qt_Comandos > 0) PRINT 'Database "' + @Ds_Database + '" compactado com sucesso!' ELSE PRINT 'Nenhum objeto para compactar no database "' + @Ds_Database + '"' END |

Usage example:

|

1 2 3 4 5 6 |

EXEC dbo.stpCompacta_Database @Ds_Database = 'Testes', -- sysname @Fl_Rodar_Shrink = 1, -- bit @Fl_Parar_Se_Falhar = 0, -- bit @Fl_Exibe_Comparacao_Tamanho = 1, -- bit @Fl_Metodo_Compressao_Page = 1 -- bit |

Obs: Não recomendo utilizar a opção @Fl_Rodar_Shrink em ambientes de produção.

oi Dirceu, na compressão Page da tabela os indices sofrem a compressão também?

A compressão Page compacta também os indices da tabela?

Parabéns! Realizei a migração do SQL server 2014 para 2016. Nele apliquei a procedure por completo. Banco do ERP Protheus com 400 GB. Após 16 horas o resultado foi uma base de 96 GB. Executei rebuild, reorder e estatisticas. Como foi feriado ontem, testei a base homologação. Não resisti coloquei em produção! Até agora tudo certo!

Excelente post.

Ótimo post! Parabéns!

No script, teria como adicionar um case avaliando o percentual de scans x updates,

então caso mais scan compacta em page, caso mais update compacta em row?

Dirceu, obrigado por compartilhar.

Realmente, isso me ajudará e muito!!!

Tenho um dúvida quanto ao backup dessa base compactada. Por exemplo, se minha base antes da compactação possuía 500GB e após a compactação, a mesma foi para 200GB, o backup será com base nos 200GB ou ele desconsiderará a compactação e utilizará os 500GB?

Oi Rodrigo, excelente dúvida. A compactação é sempre em relação aos dados, embora caso você já utilize backup compactado, provavelmente os dados já estão sendo comprimidos.

Talvez seja melhor trocar o comando para:

DBCC SHRINKFILE (1, 1) WITH NO_INFOMSGS

Esse comando não recebe o nome do banco mas sim o file_name ou file_id e da forma que o Dirceu deixou, só funciona se o file_name for igual ao database name !

É verdade, André. Corrigi o post.

Valeu!!

Dirceu, mto obrigado pelo post, ótima didática!

Você conseguiria me tirar uma dúvida simples ?

Se um coluna tiver o comprimento varchar (10) onde todos ou parte de seus caracteres são preenchidos obrigatoriamente com espaços em branco até completar seu tamanho máximo.

Exemplo:

Coluna-> Nome Varchar (10)

Dado presente na coluna -> ‘ Kin’ = (17 campos em branco + 3 caracteres)

A compactação afetaria isso de alguma forma ou é transparente?

Pergunto isso porque a aplicação final utiliza esses espaços para compor seu layout de importação,

obrigatoriamente ela irá procurar e buscar os 10 caracteres, mesmo que parte deles sejam em branco.

Fala Kin!

Excelente dúvida hein.. Vale até um post só pra explicá-la 🙂

Olá Dirceu,

Excelente matéria parabéns, fiz um teste aqui numa base de teste a compressão chegou a 120% até assustei com o resultado.

Só gostaria de tirar uma dúvida utilizei o seguinte parâmetro @Fl_Rodar_Shrink = 1, — bit e minha base que era 74GB ficou no SQL Server com 56GB disponível para limpar, porém a função sozinha não liberou o espaço.

Tive que ir em Task / Shrink / Files e só depois disso que liberou.

A dúvida é com o parâmetro setado não deve ter feito a liberação do espaço automaticamente?

Novamente parabéns pelo post.

Paulo, o shrink que realmente libera o espaço no disco, mas ele causa um efeito negativo na performance (fragmenta os índices e dados). Por isso é recomendado sempre rodar um rebuild de todos os índices após o shrink (cuidado pra não causar lentidão em produção durante o rebuild).

A compactação libera espaço dentro do arquivo de dados do sql server, ou seja, você vai poder armazenar mais dados com o espaço já alocado, mas para diminuir o espaço em disco, só com shrink