Hey Guys!

Como vocês estão ?

Neste post vou demonstrar como criar seu primeiro cubo multidimensional no modelo estrela (Star schema). Esse post acabou sendo feito sem a idealização que costumo fazer, pois estava escrevendo outro post para o blog e precisei criar um cubo simples no Analysis Services para a demonstração e entendi como uma oportunidade de falar um pouco sobre BI também, assunto que trabalho no meu dia a dia e realmente, quase não escrevo sobre. Resolvi então, criar esse post demonstrando o passo-a-passo para a criação desse cubo.

Alguns conceitos de BI

Modelagem multidimensional

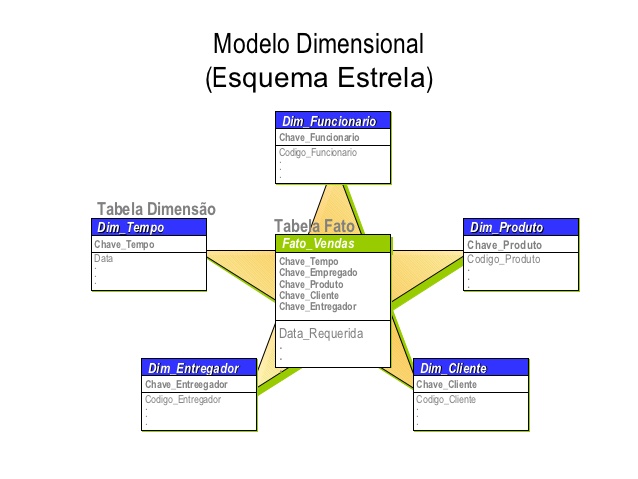

A modelagem multidimensional, ou dimensional como às vezes é chamada, é a técnica de modelagem de banco de dados para o auxílio às consultas do Data Warehouse nas mais diferentes perspectivas. A visão multidimensional permite o uso mais intuitivo para o processamento analítico pelas ferramentas OLAP (On-line Analytical Processing).

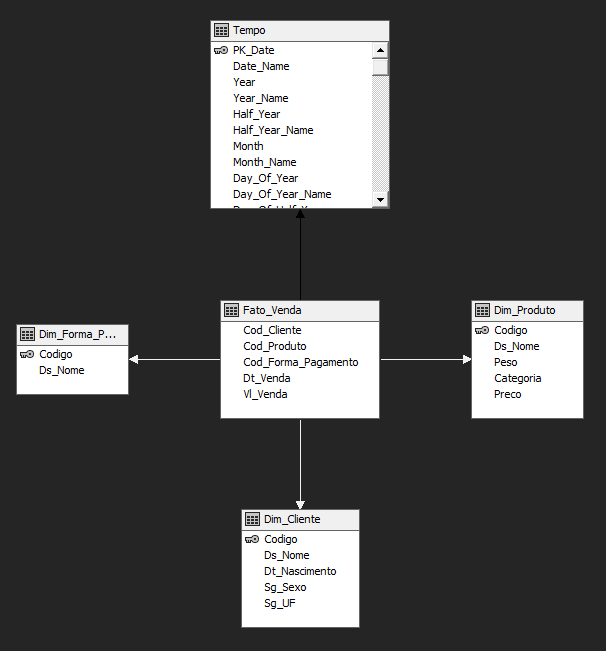

Toda modelagem dimensional possuem dois elementos imprescindíveis: as tabelas Fatos e as tabelas Dimensões. Ambas são obrigatórias e possuem característica complementares dentro de um Data Warehouse.

OLAP (On-line Analytical Processing)

O OLAP possui um conjunto de técnicas para o tratamento dos dados contidos na visão multidimensional do Data Warehouse. As ferramentas OLAP podem ser de diferentes tipos: MOLAP, ROLAP ou HOLAP.

O OLAP multidimensional (MOLAP) é o tipo de ferramenta que utiliza estrutura de banco de dados multidimensional. O OLAP relacional (ROLAP) utiliza a arquitetura relacional dos dados, onde o banco de dados possui a estrutura tradicional. Já o OLAP híbrido (HOLAP) é a junção das duas anteriores, utilizando os melhores aspectos e recursos de cada um dos dois tipos.

Dimensões

As Dimensões são os descritores dos dados oriundos da Fato. Possui o caráter qualitativo da informação e relacionamento de “um para muitos” com a tabela Fato. É a Dimensão que permite a visualização das informações por diversos aspectos e perspectivas. Ex: Cadastro de clientes, cadastro de formas de pagamento, etc.

Medidas

As medidas são valores numéricos que representam a Fato e a performance de um indicador de negócio associado às dimensões. Ex: Valor da venda, Quantidade de vendas, Ticket médio, etc.

Fatos

As Fatos contém as métricas. Possui o caráter quantitativo das informações descritivas armazenadas nas Dimensões. É onde estão armazenadas as ocorrências do negócio e possui relacionamento de “muitos para um” com as tabelas periféricas (Dimensão). É uma tabela que possui apenas as medidas e os ID’s que se ligam às dimensões para exibir as informações completas referente aos dados que serão visualizados.

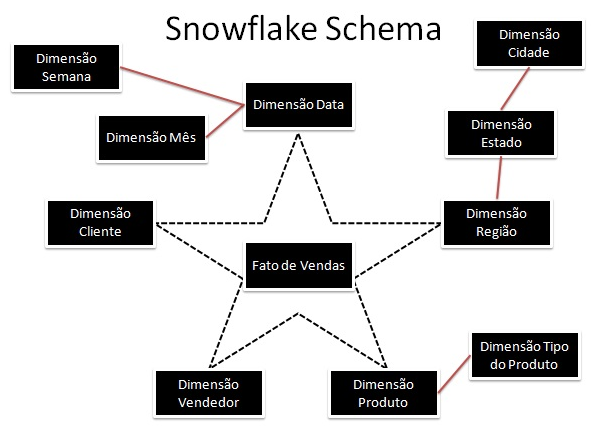

Modelos da modelagem multidimensional

A modelagem dimensional possui dois modelos: o modelo estrela (star schema) e o modelo floco de neve (snow flake). Cada um com aplicabilidade diferente a depender da especificidade do problema.

As Dimensões do modelo estrela são desnormalizados, ao contrário do snow flake, que parcialmente possui normalização. A estrutura relacional diferencia-se da estrutura multidimensional principalmente devido a normalização, pouca redundância e a frequência de atualizações suportadas. A estrutura multidimensional possui, normalmente, desnormalização de tabelas, alta redundância e suporta periodicidade de atualizações de dados muito menor do que uma estrutura relacional convencional.

Gerando as dimensões e Fatos (ETL)

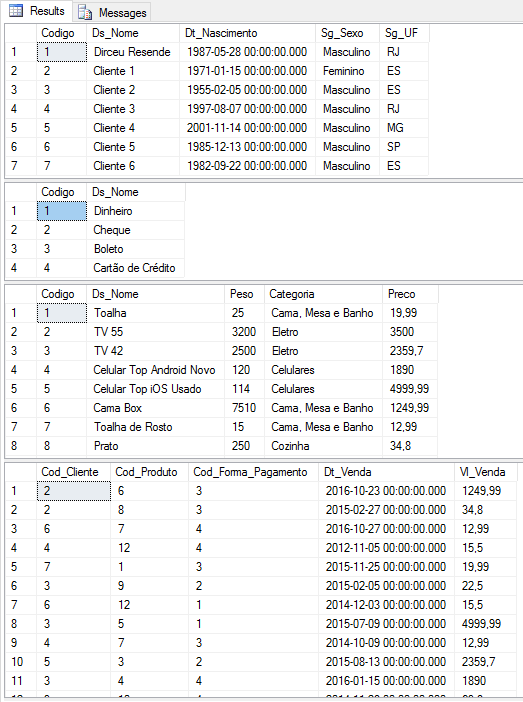

Nesta parte da criação do cubo, vou gerar as dimensões e Fatos com dados aleatórios. Claro que esses dados são utilizados apenas para demonstração. Num cenário real, não se deve utilizar dados aleatórios em análises de BI para criação de cubos multidimensionais, pois a análise não teria um valor real.

Para a geração aleatória das datas utilizei a função fncRand(), disponível no post SQL Server – Msg 443 Invalid use of a side-effecting operator ‘rand’ within a function.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 |

------------------------------------------------------------------------ -- CRIAÇÃO DAS DIMENSÕES ------------------------------------------------------------------------ IF (OBJECT_ID('dbo.Dim_Cliente') IS NOT NULL) DROP TABLE dbo.Dim_Cliente CREATE TABLE dbo.Dim_Cliente ( Codigo INT IDENTITY(1, 1), Ds_Nome VARCHAR(100), Dt_Nascimento DATETIME, Sg_Sexo VARCHAR(20), Sg_UF VARCHAR(2), ) INSERT INTO dbo.Dim_Cliente VALUES ('Dirceu Resende', '1987-05-28', 'Masculino', 'RJ'), ('Cliente 1', '1971-01-15', 'Feminino', 'ES'), ('Cliente 2', '1955-02-05', 'Masculino', 'ES'), ('Cliente 3', '1997-08-07', 'Masculino', 'RJ'), ('Cliente 4', '2001-11-14', 'Masculino', 'MG'), ('Cliente 5', '1985-12-13', 'Masculino', 'SP'), ('Cliente 6', '1982-09-22', 'Masculino', 'ES') IF (OBJECT_ID('dbo.Dim_Forma_Pagamento') IS NOT NULL) DROP TABLE dbo.Dim_Forma_Pagamento CREATE TABLE dbo.Dim_Forma_Pagamento ( Codigo INT IDENTITY(1, 1), Ds_Nome VARCHAR(100) ) INSERT INTO dbo.Dim_Forma_Pagamento VALUES ('Dinheiro'), ('Cheque'), ('Boleto'), ('Cartão de Crédito') IF (OBJECT_ID('dbo.Dim_Produto') IS NOT NULL) DROP TABLE dbo.Dim_Produto CREATE TABLE dbo.Dim_Produto ( Codigo INT IDENTITY(1, 1), Ds_Nome VARCHAR(100), Peso INT, Categoria VARCHAR(50), Preco FLOAT ) INSERT INTO dbo.Dim_Produto VALUES ('Toalha', 25, 'Cama, Mesa e Banho', 19.99), ('TV 55', 3200, 'Eletro', 3500), ('TV 42', 2500, 'Eletro', 2359.70), ('Celular Top Android Novo', 120, 'Celulares', 1890), ('Celular Top iOS Usado', 114, 'Celulares', 4999.99), ('Cama Box', 7510, 'Cama, Mesa e Banho', 1249.99), ('Toalha de Rosto', 15, 'Cama, Mesa e Banho', 12.99), ('Prato', 250, 'Cozinha', 34.80), ('Talher', 25, 'Cozinha', 22.50), ('Panela', 250, 'Cozinha', 69.80), ('Microondas', 1450, 'Eletro', 369.99), ('Encosto de Mesa', 35, 'Cama, Mesa e Banho', 15.50) ------------------------------------------------------------------------ -- CRIAÇÃO DA FATO ------------------------------------------------------------------------ IF (OBJECT_ID('dbo.Fato_Venda') IS NOT NULL) DROP TABLE dbo.Fato_Venda CREATE TABLE dbo.Fato_Venda ( Cod_Cliente INT, Cod_Produto INT, Cod_Forma_Pagamento INT, Dt_Venda DATETIME, Vl_Venda FLOAT ) DECLARE @Contador INT = 1, @Total INT = 1000 WHILE(@Contador <= @Total) BEGIN INSERT INTO dbo.Fato_Venda SELECT TOP 1 (SELECT TOP 1 Codigo FROM dbo.Dim_Cliente ORDER BY NEWID()) AS Cod_Cliente, Codigo AS Cod_Produto, (SELECT TOP 1 Codigo FROM dbo.Dim_Forma_Pagamento ORDER BY NEWID()) AS Cod_Forma_Pagamento, DATEADD(DAY, dbo.fncRand(1885), '2012-01-01') AS Dt_Venda, Preco AS Vl_Venda FROM dbo.Dim_Produto ORDER BY NEWID() SET @Contador += 1 END |

Visualizando a massa aleatória de testes:

|

1 2 3 4 |

SELECT * FROM dbo.Dim_Cliente SELECT * FROM dbo.Dim_Forma_Pagamento SELECT * FROM dbo.Dim_Produto SELECT * FROM dbo.Fato_Venda |

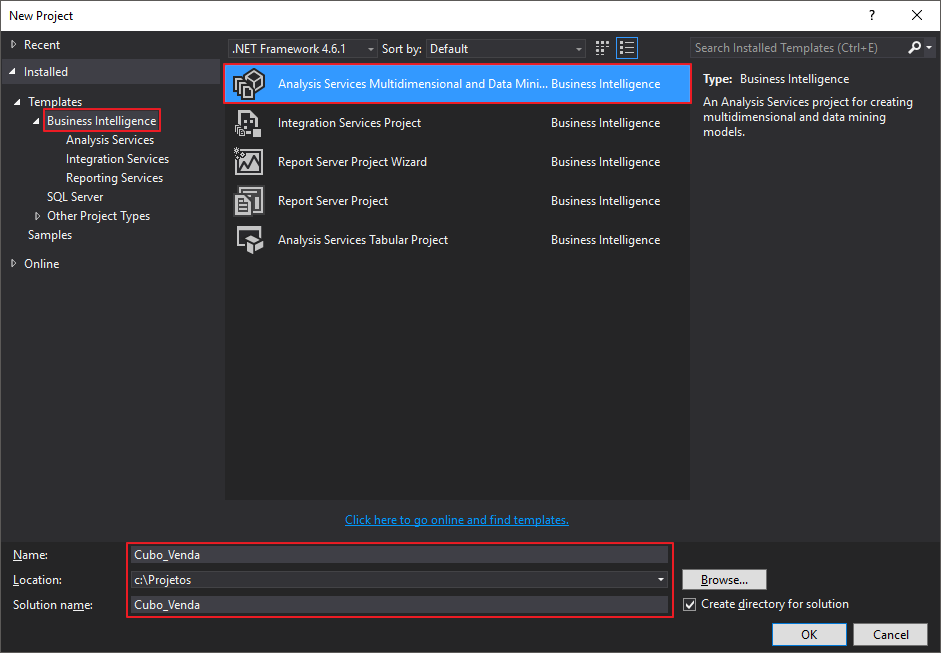

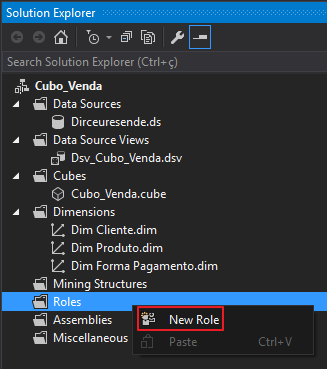

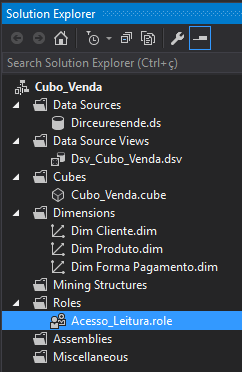

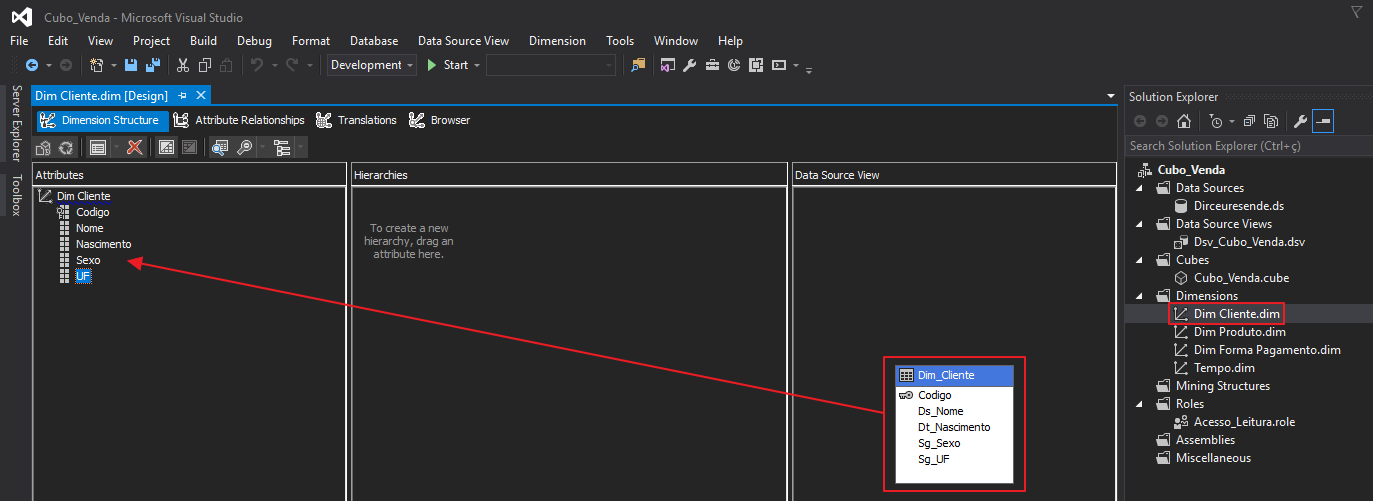

Criando o cubo no Visual Studio (Data Tools)

Antigamente conhecimento como Business Intelligence Development Studio (BIDS) ou Visual Studio Shell, o Microsoft Data Tools foi incorporado ao Visual Studio a partir da versão 2015 e agora fazem parte de um só produto, sendo agora apenas um plugin do Visual Studio, que pode ser baixado acessando este link.

Para começar, abra o SQL Server Data Tools 2015 e no menu File > New > Project e selecione o tipo de projeto “Analysis Services Multidimensional and Datamining Project”.

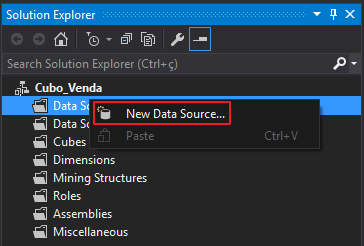

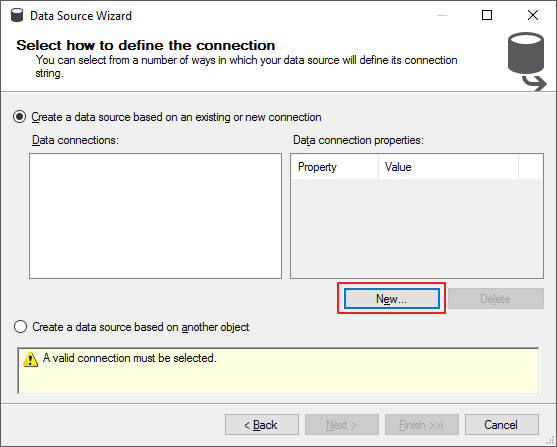

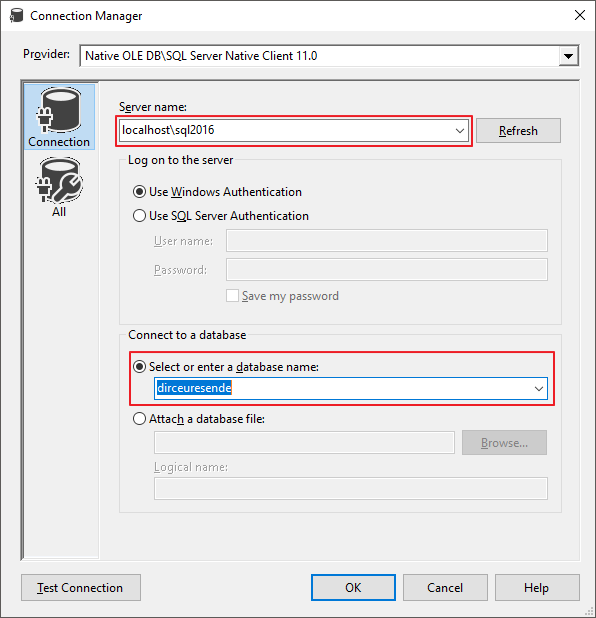

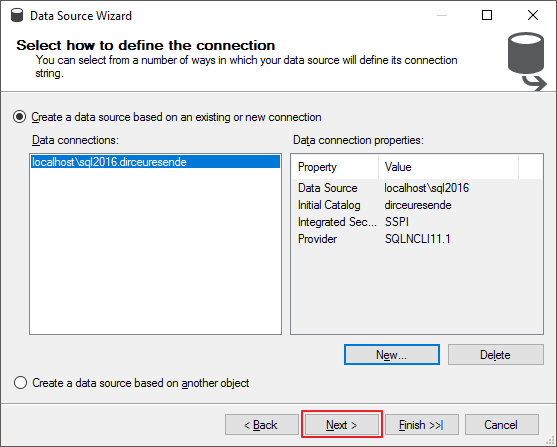

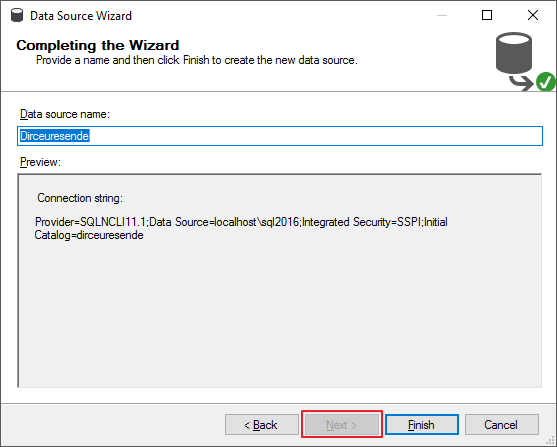

Criando uma nova fonte de dados (Data Source)

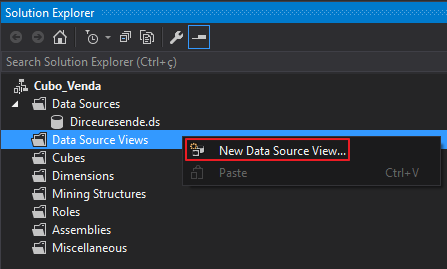

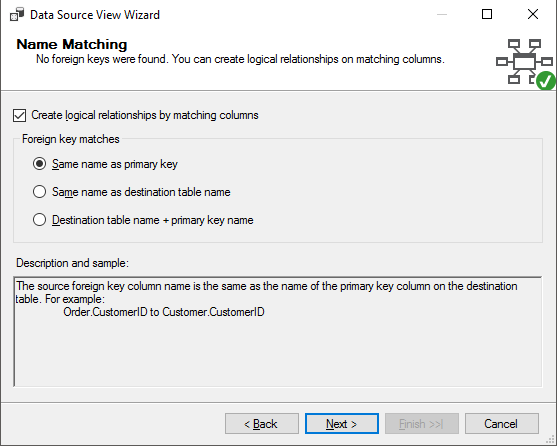

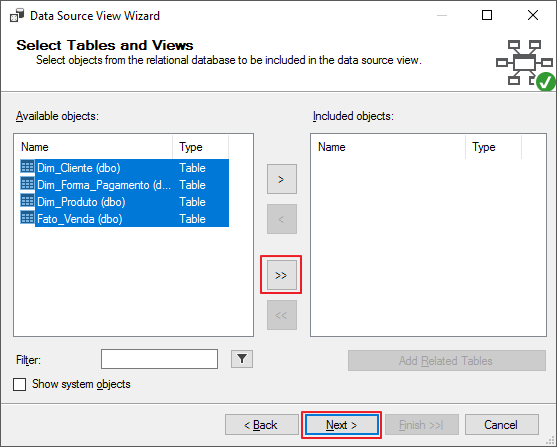

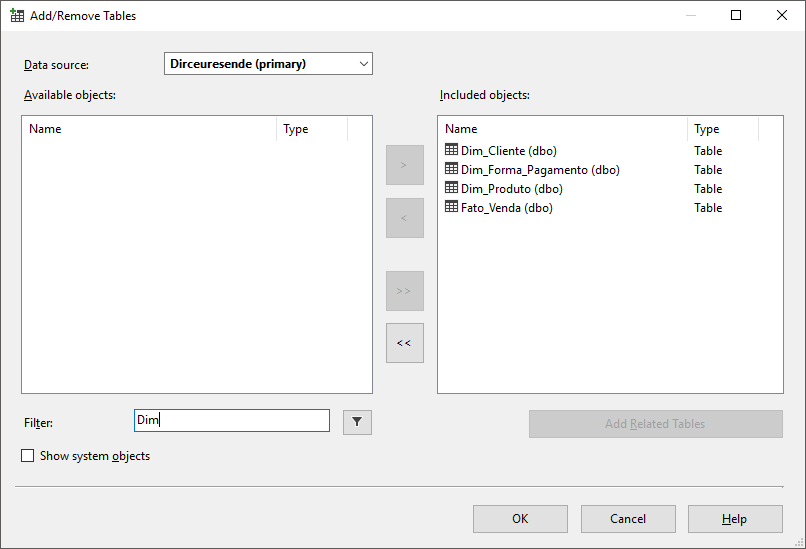

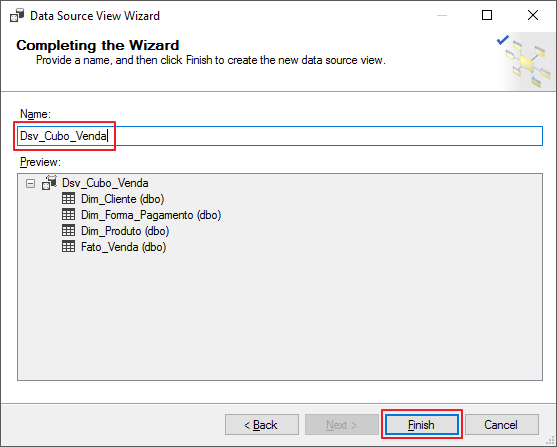

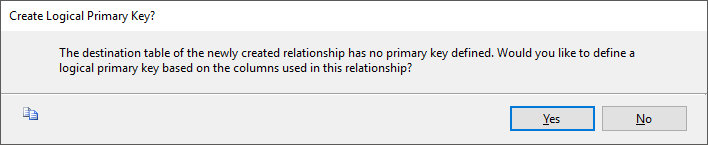

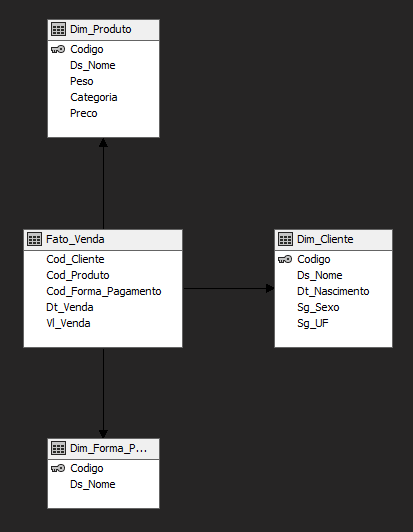

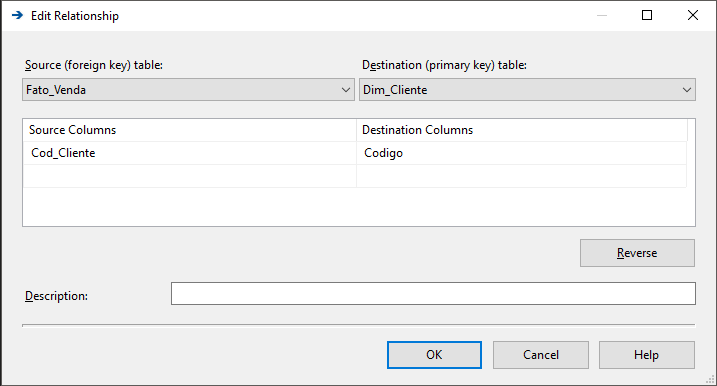

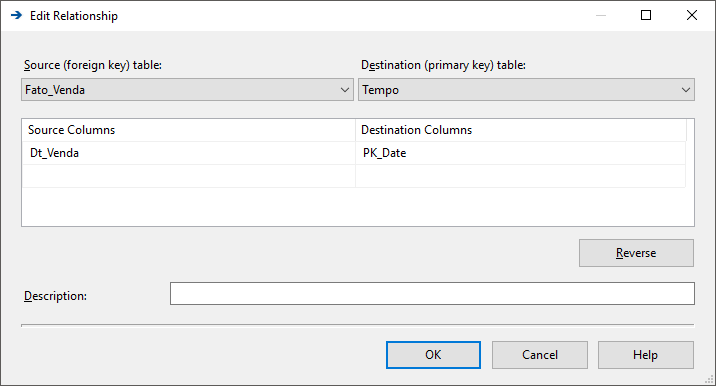

Visualizar conteúdoCriando um novo Data Source View

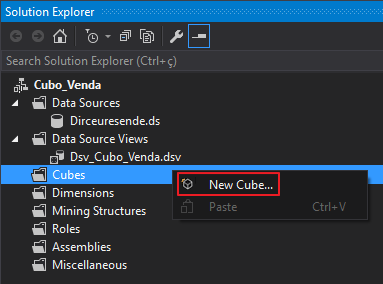

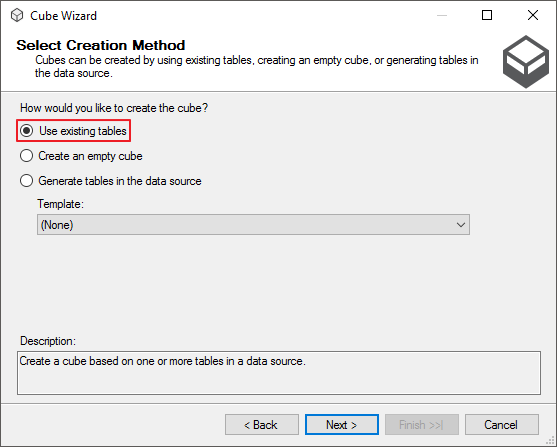

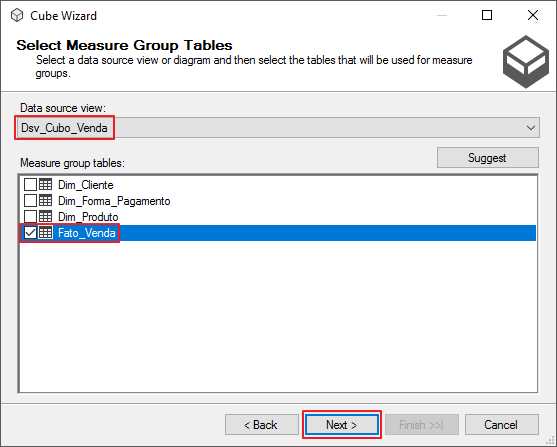

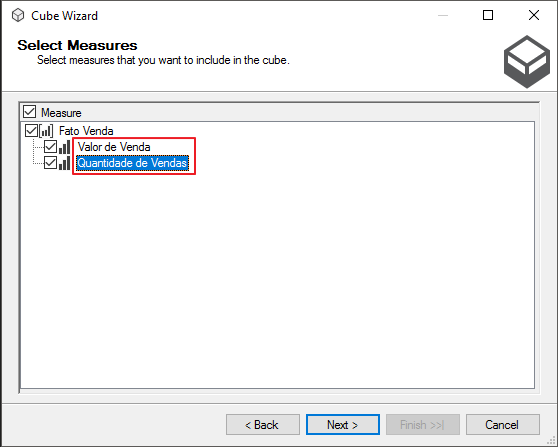

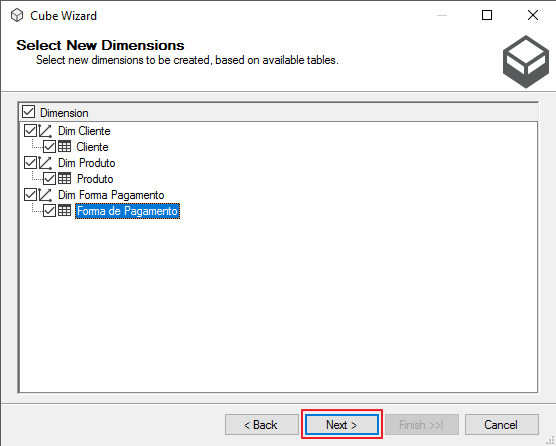

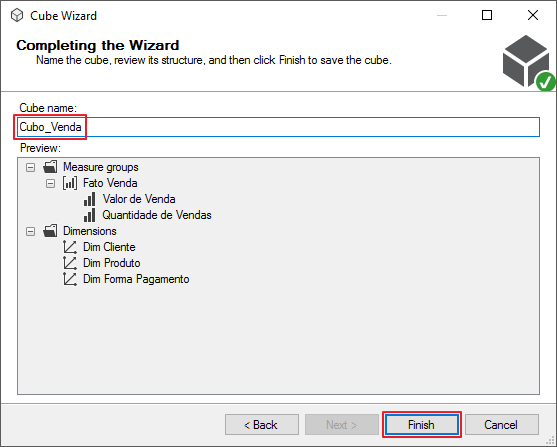

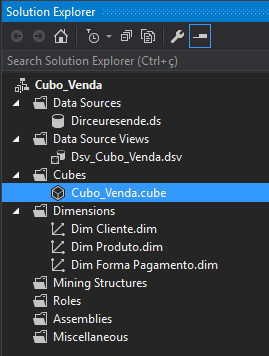

Visualizar conteúdoCriando um novo Cubo

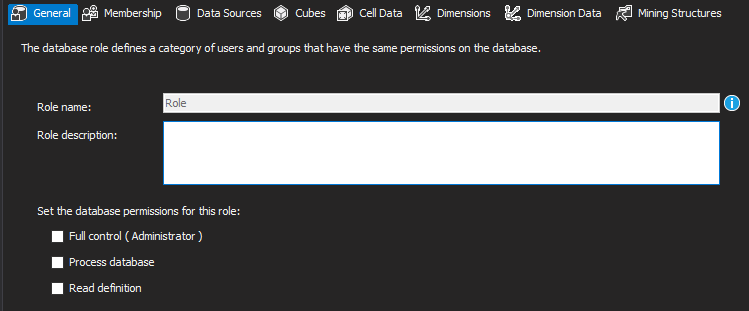

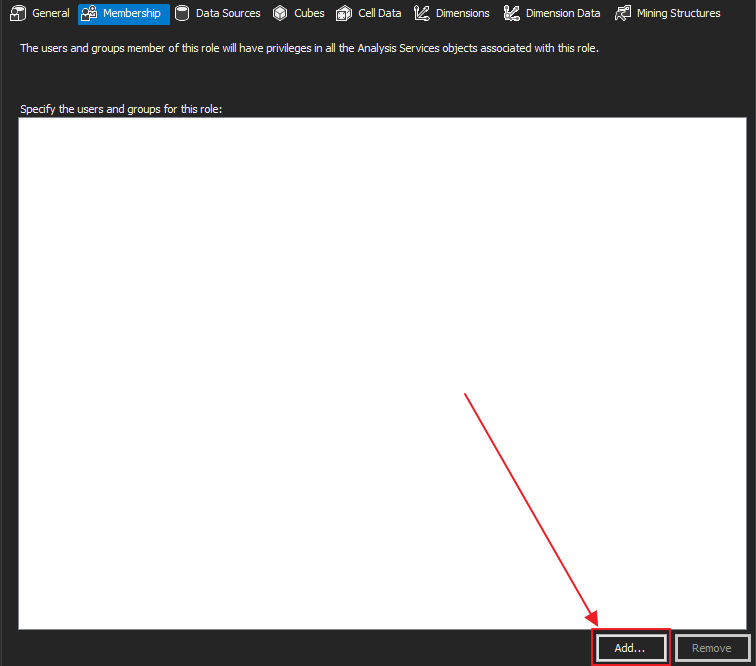

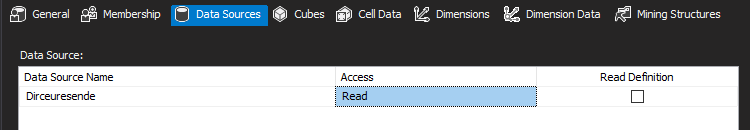

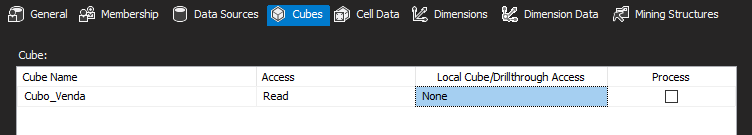

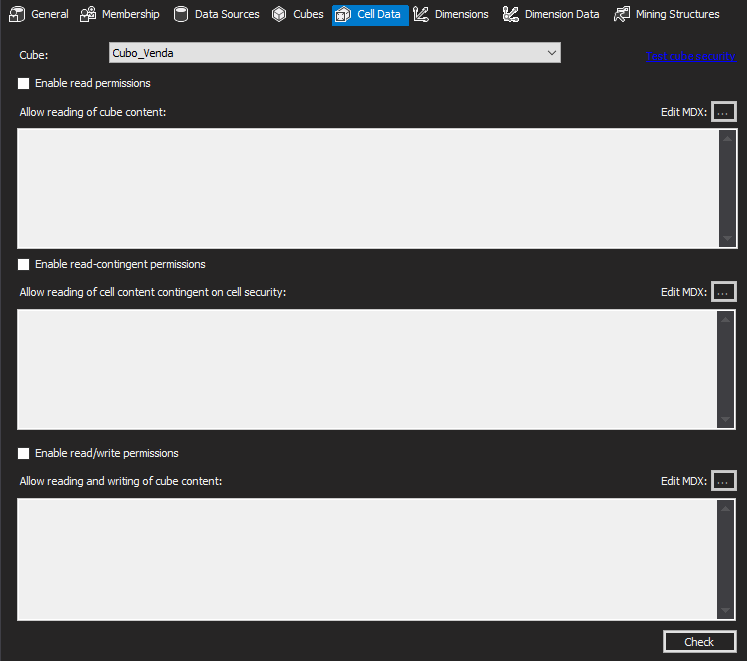

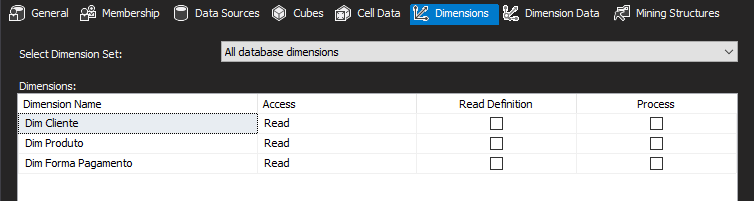

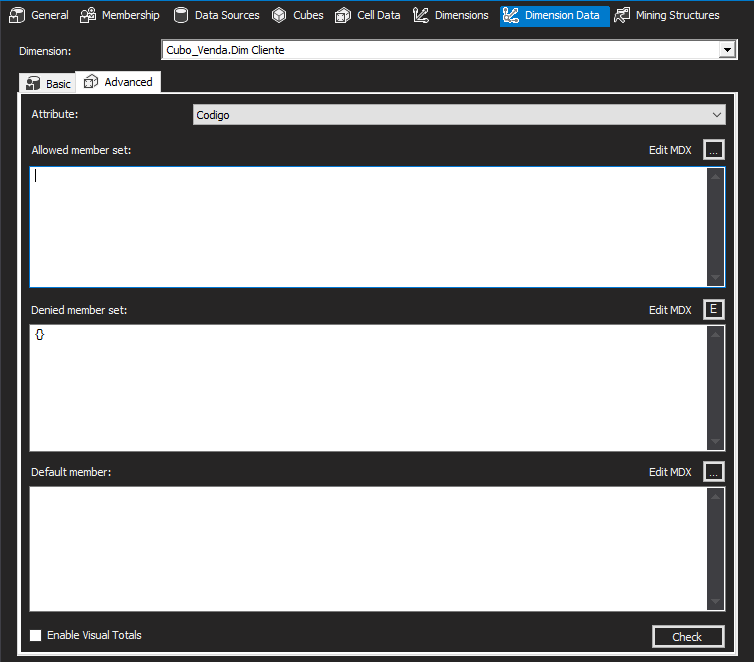

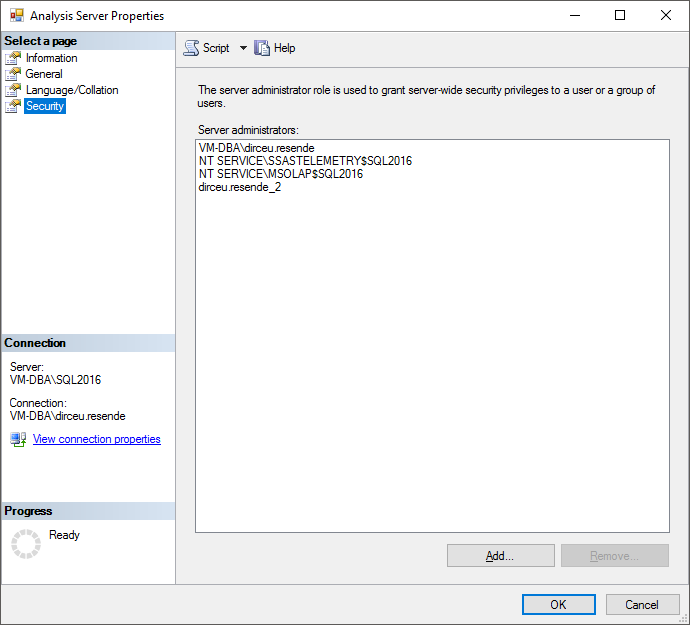

Visualizar conteúdoGerenciando permissões

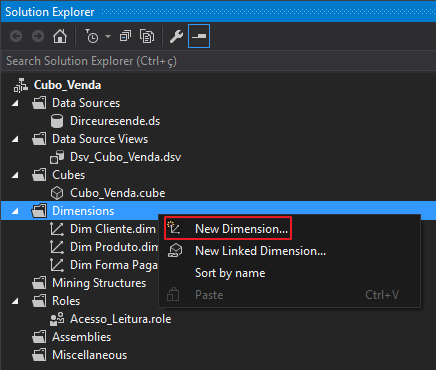

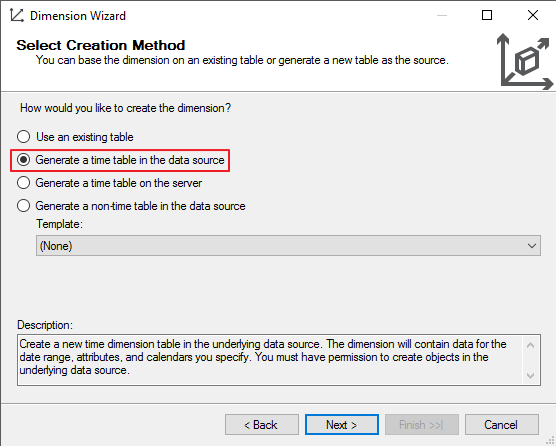

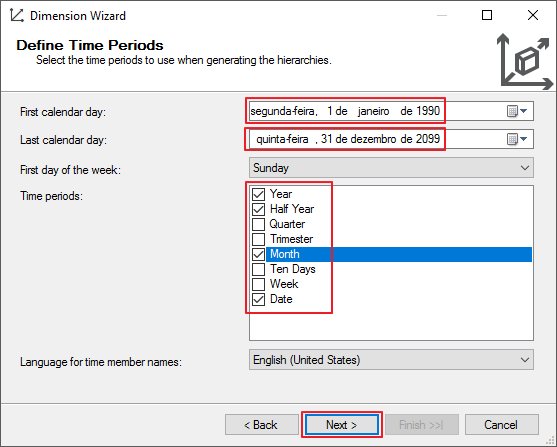

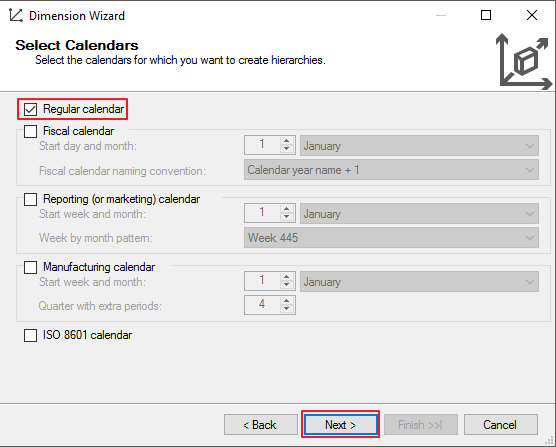

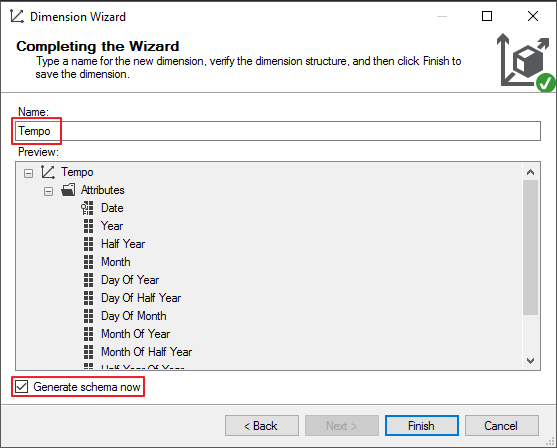

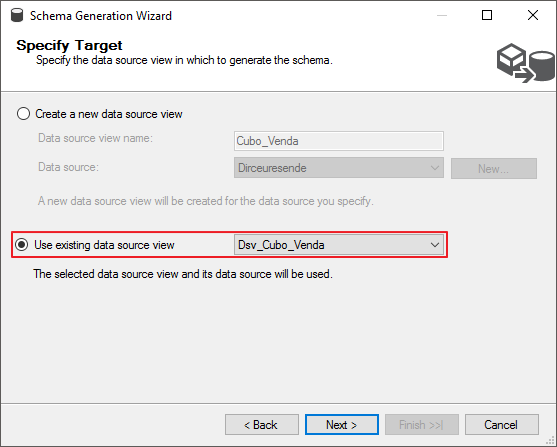

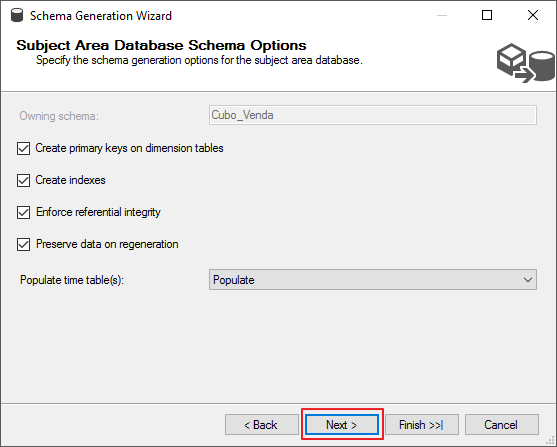

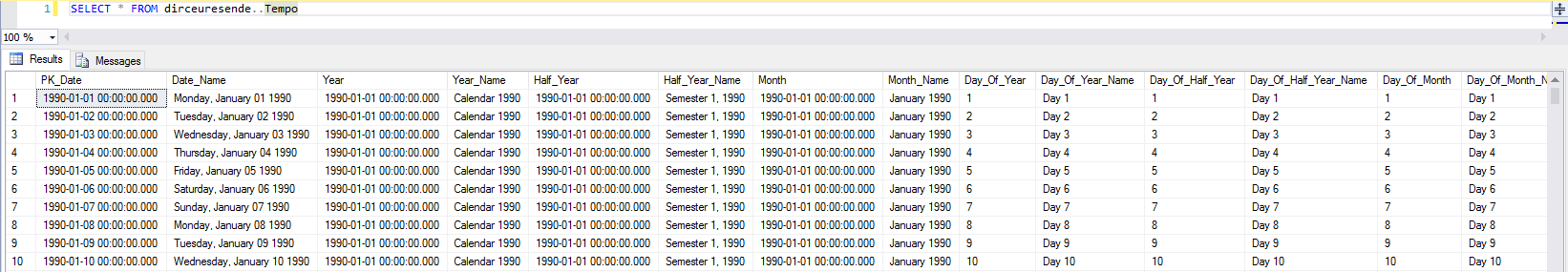

Visualizar conteúdoCriando a dimensão de Tempo

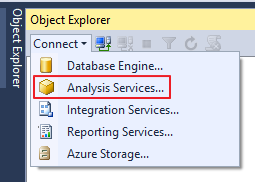

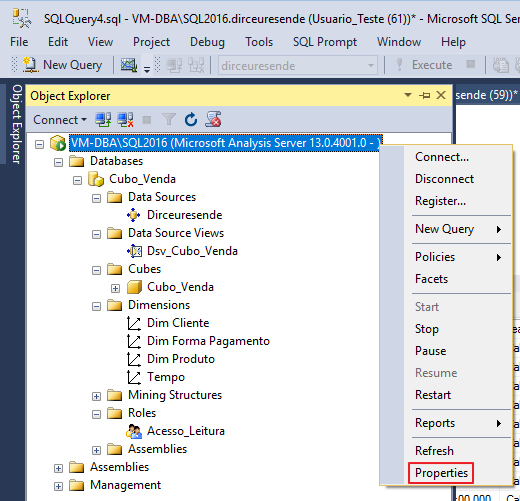

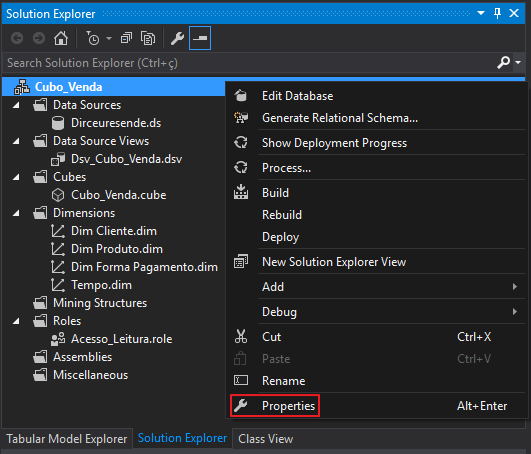

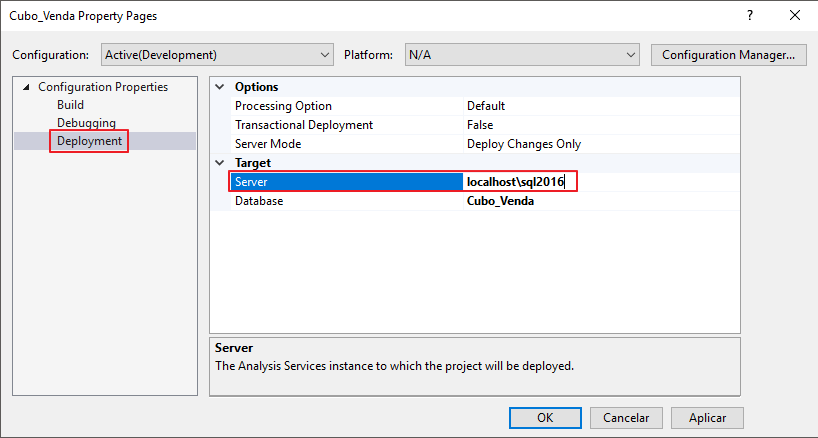

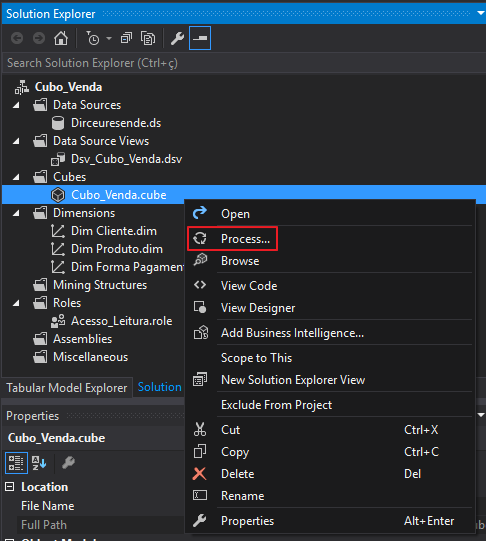

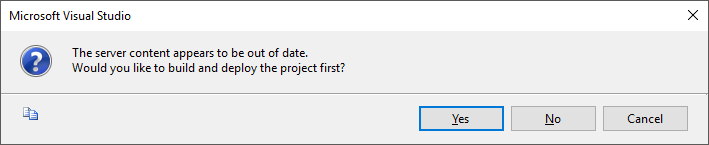

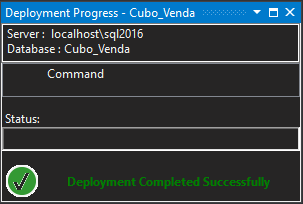

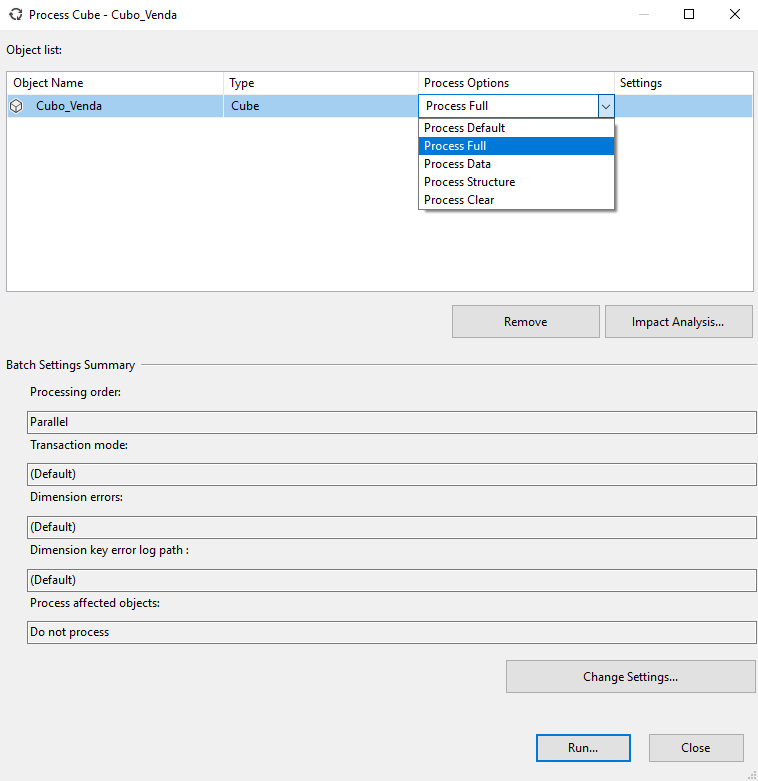

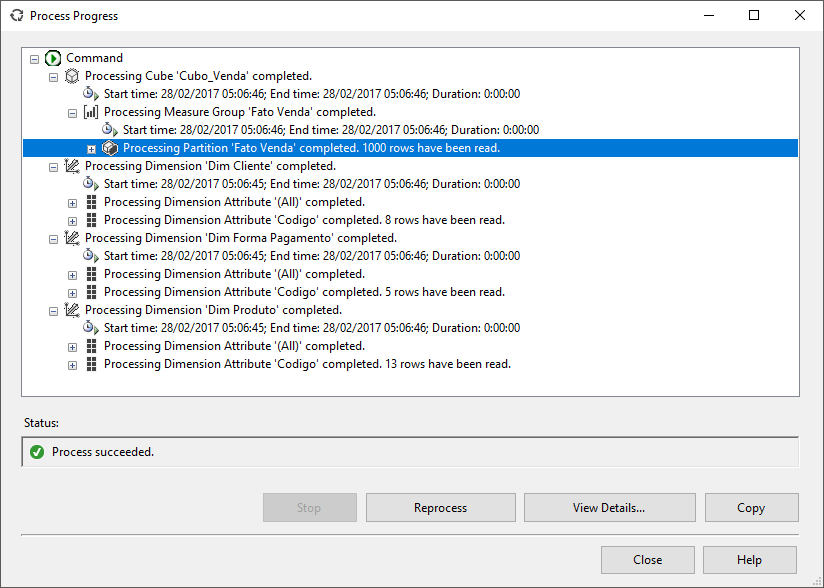

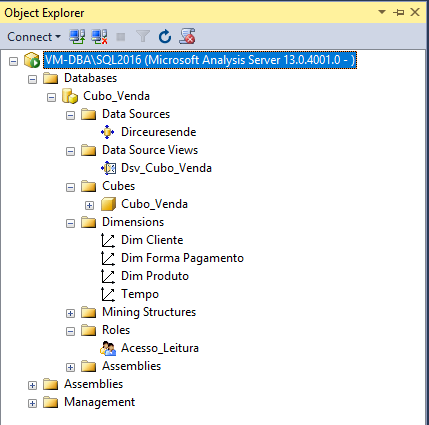

Visualizar conteúdoProcessando o Cubo e Publicando no Analysis Services

Visualizar conteúdoAnd that's it, folks!

Abraço!

Bom dia. Parabéns pelo tutorial. Mas fiquei com uma dúvida: Quando vou processar a Dimensão está dando erro de login e senha incorretos. Já conferir os dados de acesso estão corretos, o usuario é administrador do SO e os servicos do SQL Server estão todos ativos. Tem alguma ideia do que possa ser?

Dirceu, ótimo material sobre cubo, Parabéns!

Tenho uma duvida, como que faria depois que cubos estivesse pronto e quisesse inserir um novo campo, seria preciso dar UPDATE na fato com o novo campo?

Abraços!

Ei Rafa, tudo bem?

Tem que rodar o alter table na fato pra inserir o novo campo, utilizando o update posteriormente para popular os dados do novo campo.

Depois que tem atualizar o dsv no data tools, fazer os ajustes necessários no cubo e reprocessar o cubo.

Parabéns.. muito bom.

Fico feliz em saber que você gostou 🙂

Dirceu Resende…

Pô, você é o cara! Que Deus continue abençoando voce.

Desejo tudo de bom para você de verdade. Que artigo completo e incrível. Era o que eu tava precisando.

Abraços de um angolano,

Edilásio Paulo

Bom dia.

Já faz um bom tempo que procuro um material desse, eu depois de muita pesquisa, sem dúvida nenhuma esse foi o melhor e mais completo que encontrei, só tenho a agradecer esse compartilhamento de informações.

Que Deus continue abençoando muito vocês , um grade abraço.

Marcelo,

Boa tarde.

Muito obrigado pelo seu feedback!! É isso que me motiva a continuar sempre postando.